Do you know the difference between facts and knowledge? Did you know there is a difference?

A main criticism of large language models (LLMs) is that they “hallucinate”. That is, they make up facts that are not true. This bothers most people. It’s bad enough when people get facts wrong, but when computers, trained on the writings of people, manage to invent entirely new wrong facts, people get really concerned. Since LLMs answer with such confidence, they end up being extra untrustworthy.

In the two years since LLMs took the world by storm, we have seen an airline chat system give incorrect discount information to a customer, and a court rule that the airline was liable for the bad information. We have seen legal LLMs cite court cases that don’t exist, with made-up URLs that don’t resolve to a valid website or page.

One way we deal with these wrong facts is to insist that people double-check any facts an LLM outputs. In practice, many users don’t know about the problem or just don’t do the work.

What is knowledge, if not facts?

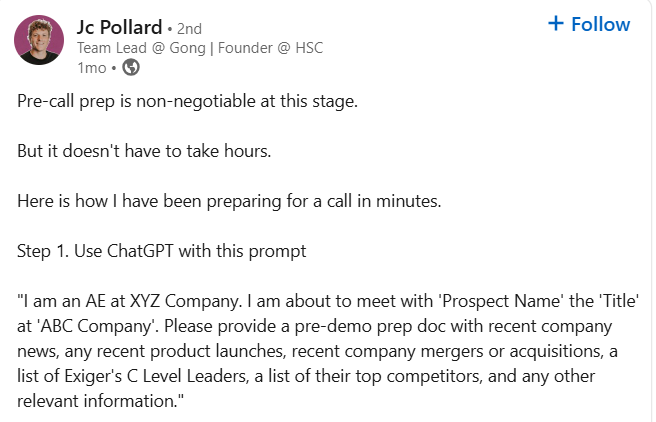

This is the trap in which so many critics of LLMs get caught. The reasoning goes: Since it can’t get the facts right, and makes more work checking and fixing them, it’s not worth asking the LLM. But it turns out it is! Here is the LinkedIn post that changed my mind, dramatically.

If you’ve read this far, you’ll know what sets off any critic: The facts will be wrong, and you, Jc, will look like an idiot coming in prep’d by this ChatGPT document. See the comments. I told him as much.

I left my comment there, and apologized for it a short time later. This was the example I needed to see that the knowledge he sought was not actual facts about the company. He sought the vibe. Factual errors can even add value.

Be the sales guy for a moment. “I understand that ABC is a leader in LMNOP.”

Your prospect replies, “Well, you’re too kind. DEF is the clear market leader at LMNOP, but we feel that our solution is better and we hope to overtake them this year.”

Now you have a conversation. Perhaps XYZ, your company, can help ABC improve or sell LMNOP. Perhaps that’s why, or close to why, you called in the first place. The fact did not matter. The error of fact sparked discussion. The knowledge was the relevance of LMNOP. LLMs are really good at surfacing this knowledge.

At the end of 2023, when I was challenged by a friend and long-term business mentor to figure out my AI story, I spoke with a potential client about what they would expect. They expected that I could automate some important process with ChatGPT. I said that in my initial research, ChatGPT doesn’t really work for that.

But I asked ChatGPT to write a story about Eeyore and Paul Bunyan using their flagship backup product to save the forest by “backing it up”. The story was delightful and surfaced many features of their offering in a fictional context. I shared the story with that potential client as an example of what they might use ChatGPT for. They were quite delighted and even more dismissive. I felt like the hero of this commercial.

My hope for today is that y’all don’t throw out easy sources of knowledge because they get creative with facts. I also hope that you’ll use private, local LLMs for this, as they are just as good in practice at general knowledge as any LLM in the public cloud. You’ll be pleasantly surprised if you haven’t discovered that yet.

-Brad

Addendum: Despite being in a boxing ring, the Grok-generated dogs in the picture, Knowledge and Facts, are not fighting. There’s no need for them to fight. They’re just different animals.